Why We Don't Use Eyeball Tracking in Our AI Proctoring

When people think about online exams and cheating, one of the first concerns they raise is: What if a test-taker looks at their phone or another device? This is why eyeball tracking is often suggested as a powerful solution in AI proctoring. If a system can detect when a test-taker’s eyes are moving away from the screen, wouldn’t that be a great way to identify potential cheating?

At AutoProctor, we had this same thought when we first launched in 2020. In fact, our early versions of AutoProctor included eyeball tracking. However, after real-world testing and user feedback, we realized that eyeball tracking wasn’t as effective as it seemed in theory. Instead, it resulted in a frustrating experience for test-takers and test administrators alike. Here’s why we decided to remove it.

What Is Eyeball Tracking and Why Is It Considered Useful?

Eyeball tracking monitors where a person is looking. In online proctoring, it can track whether a test-taker’s gaze remains fixed on the screen or frequently moves away. The assumption is simple: if someone’s eyes are off-screen too often, they might be looking at notes, a phone, or another device.

This seems like a great way to detect suspicious behavior. If a student keeps glancing to the side, it could mean they are checking reference material or communicating with someone nearby. This is why many proctoring tools use gaze tracking as part of their cheating detection algorithms.

We Were Technically Capable of Implementing It

AutoProctor had the technical capability to implement and refine eyeball tracking, and our early versions did exactly that. Using the webcam feed, our AI model analyzed gaze direction and detected when a test-taker’s eyes strayed from the screen for extended periods. If a candidate’s gaze pattern suggested potential misconduct, we flagged it as a violation.
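To make the idea concrete, here is a minimal sketch of how "eyes off the screen for an extended period" could be turned into a flag. It is an illustration only, not AutoProctor's actual code: the `GazeSample` type, the `off_screen_spans` function, and the 3-second threshold are all hypothetical, and the sketch assumes some gaze-estimation model has already labeled each webcam frame as on-screen or off-screen.

```python
from dataclasses import dataclass
from typing import Iterable, List, Optional, Tuple


@dataclass
class GazeSample:
    t: float         # seconds since the test started
    on_screen: bool  # hypothetical per-frame output of a gaze-estimation model


def off_screen_spans(
    samples: Iterable[GazeSample], min_duration: float = 3.0
) -> List[Tuple[float, float]]:
    """Return (start, end) spans where gaze stayed off-screen for at least
    min_duration seconds. The threshold is an assumed value."""
    spans: List[Tuple[float, float]] = []
    start: Optional[float] = None   # when the current off-screen run began
    last_t: Optional[float] = None  # timestamp of the most recent sample
    for s in samples:
        if not s.on_screen:
            if start is None:
                start = s.t
        else:
            # Gaze returned to the screen: keep the run only if it was long enough.
            if start is not None and s.t - start >= min_duration:
                spans.append((start, s.t))
            start = None
        last_t = s.t
    # Close a run that is still open when the samples end.
    if start is not None and last_t is not None and last_t - start >= min_duration:
        spans.append((start, last_t))
    return spans


samples = [
    GazeSample(0.0, True),
    GazeSample(1.0, False), GazeSample(2.0, False),
    GazeSample(3.0, False), GazeSample(4.0, False),
    GazeSample(5.0, True),   # looked away from t=1 to t=5: flagged
    GazeSample(6.0, False),
    GazeSample(7.0, True),   # a one-second glance: not flagged
]
flagged = off_screen_spans(samples)
```

Note that a rule like this only measures how long someone looked away. It has no way of knowing why, which is where the problems described in the rest of this post come from.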

Why We Removed It

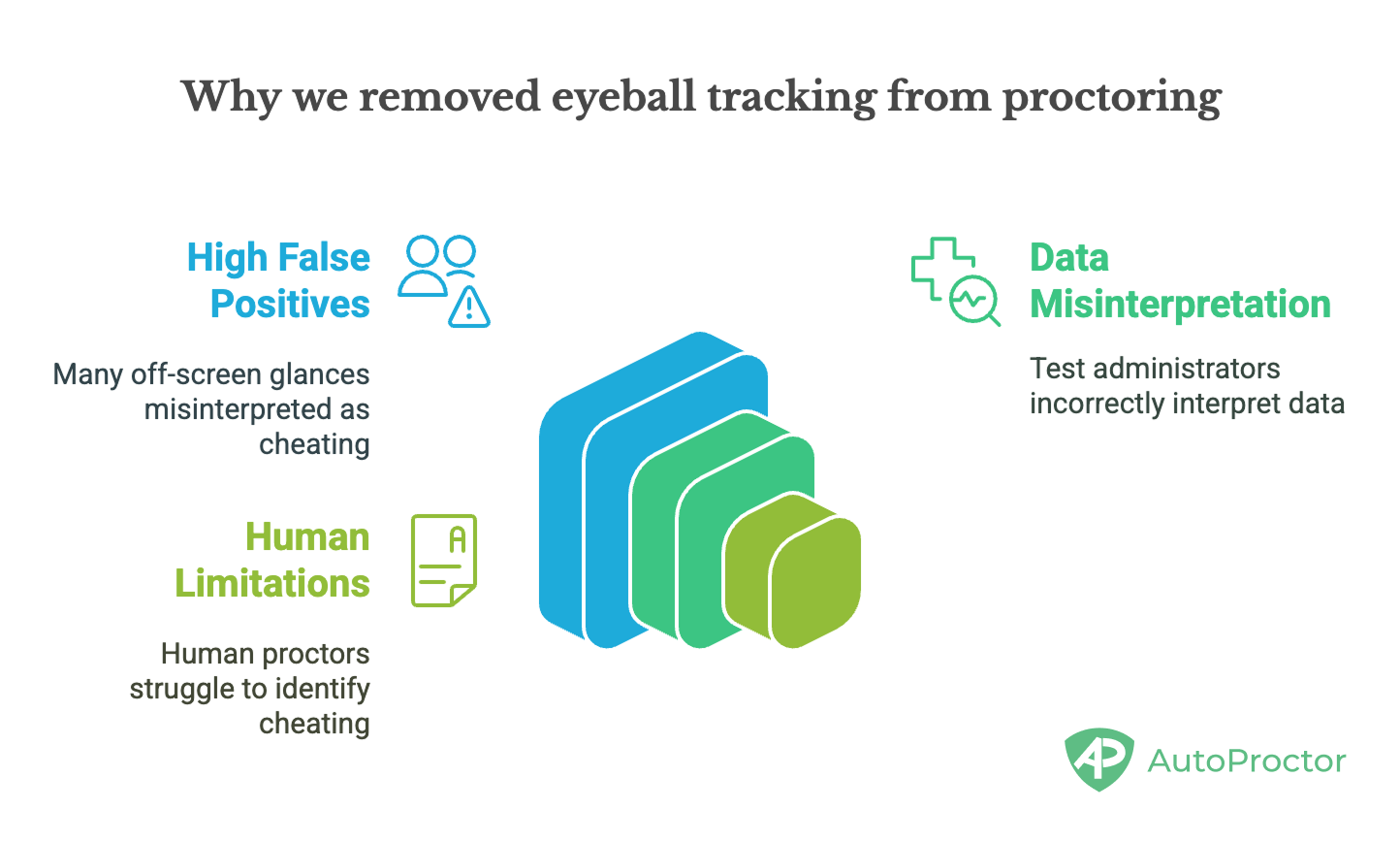

Though eyeball tracking seemed promising, we encountered significant issues when putting it into practice. Here are the main reasons we decided to drop it:

1. High False Positive Rate

One of the biggest problems with eyeball tracking was the sheer number of false positives it generated. Not every off-screen glance means cheating. In fact, during an exam, it is natural for test-takers to look away from the screen while thinking. When solving a problem, recalling information, or formulating an answer, people tend to look up, down, or to the side as they process their thoughts.

Unfortunately, the AI model couldn’t differentiate between genuine thinking behavior and actual cheating. This led to many test-takers being unfairly flagged.
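A quick back-of-the-envelope calculation shows why this matters so much. The sketch below uses invented numbers, not AutoProctor measurements: it assumes 5% of test-takers actually cheat, that gaze tracking flags 90% of them, and that it also flags 30% of honest test-takers who simply look away while thinking. The function name `flag_precision` is hypothetical.

```python
def flag_precision(cheat_rate: float, detect_rate: float, false_flag_rate: float) -> float:
    """Fraction of flagged test-takers who are actually cheating."""
    true_flags = cheat_rate * detect_rate             # cheaters who get flagged
    false_flags = (1 - cheat_rate) * false_flag_rate  # honest test-takers who get flagged
    return true_flags / (true_flags + false_flags)


# All three rates are made-up values chosen for illustration.
precision = flag_precision(cheat_rate=0.05, detect_rate=0.9, false_flag_rate=0.3)
```

Under these assumed rates, only about 14% of flagged test-takers would actually be cheating. Because honest test-takers far outnumber cheaters, even a moderate false-flag rate means most flags land on people who did nothing wrong.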

2. Test Administrators Misinterpreted the Data

We advised test administrators not to rely solely on the AI-generated trust score and to always review the captured images before making a judgment. However, many administrators skipped this step and treated the score as the final verdict.

This was a problem: a candidate could be falsely accused of cheating simply for looking around while thinking deeply. When test administrators took the trust score at face value, test-takers were treated unfairly.

3. Even a Human Can’t Tell If Someone Is Cheating Just by Looking

Imagine you are monitoring a live video feed of a candidate taking an exam. The candidate looks to the side for a few seconds, then back at the screen. Just by watching this behavior, would you immediately conclude that they are cheating? Probably not.

Even as humans, we cannot always tell whether someone is cheating just by observing their gaze. The context matters: Are they deep in thought? Are they distracted by something in their environment? Are they simply experiencing eye strain?

If a human proctor can’t make a confident decision based on gaze behavior alone, then how can an AI model do any better? AI, after all, is only as good as the data it is trained on. Since gaze movement is not a definitive indicator of cheating, relying on AI to make such a determination leads to unreliable outcomes.

Conclusion

Though eyeball tracking might sound like a foolproof method for catching cheating, our real-world experience showed that it introduces more problems than it solves. Given the high false positive rate, administrators treating the score as a verdict, and the fundamental issue that even humans cannot reliably assess cheating based on gaze behavior alone, we determined that it was not the right approach for AutoProctor.

By focusing on more reliable signals of suspicious behavior and encouraging human oversight, we aim to provide a more accurate and fair proctoring experience for everyone involved.